Antithesis driven testing

Disclaimer: I’m a customer of Antithesis, have participated in their Customer Advisory Board, and have a friendly relationship with the team. That said, this post is entirely my own—unpaid, unsolicited, and written because I believe the tech is worth sharing.

I want a test system smart enough to discover the bugs I can’t anticipate.

A system that can simulate the full chaos of the internet—flaky networks, machine crashes, race conditions—while simultaneously learning how to be the worst possible horde of users. A testing pattern that can give me confidence that I won’t be woken up at 3 AM for a production outage or discover I’ve silently been losing data.

That’s the bar Graft has to meet, and this is the story of my experience using Antithesis to test Graft.

What’s Graft?

Graft is an open-source transactional storage engine optimized for lazy, partial, and strongly consistent replication. It’s designed for edge, offline-first, and distributed applications, meaning it must survive partial writes, network partitions, system crashes, and bad timing. The kind of problems you don’t write test cases for. You simulate them.

Learn more about Graft

#DST origin story

In 2010, the FoundationDB team asked themselves a question: how do you reliably test a distributed database under real-world failure conditions? There was no established blueprint[1], just a hunch from Dave Scherer, one of the co-founders.

Years earlier, while building a BigQuery-style system at Visual Sciences, Scherer had created a model-based simulation to study performance and tail latency. But he quickly ran into a fundamental problem: how could he verify that the model and implementation were in sync?

Fast forward to FoundationDB, where the team flipped the idea on its head: what if the simulation was the implementation? What if you could run the actual database deterministically, inject faults, and explore edge cases in a repeatable way?

That idea became Simulation, FoundationDB’s deterministic test harness. It let them run the full system at 10x speed, simulate crashes and partitions, and verify correctness across thousands of parallel timelines. It was a game-changer.

“Simulation is able to conduct a deterministic simulation of an entire FoundationDB cluster within a single-threaded process. Determinism is crucial in that it allows perfect repeatability of a simulated run, facilitating controlled experiments to home in on issues.”

“It seems unlikely that we would have been able to build FoundationDB without this technology.”

FoundationDB documentation

These days, we call this pattern Deterministic Simulation Testing (DST), and it’s become a foundational technique for building reliable infrastructure. Since FoundationDB paved the way, teams like RisingWave, TigerBeetle, and Resonate have all built their own DST systems—and seen the same payoff: deep confidence in system correctness.

But rolling your own DST isn’t easy.

You need to design your entire system to eliminate sources of non-determinism: IO, time, randomness, thread interleaving, and more. You have to build a test harness that can orchestrate workloads, inject faults, and explore timelines. Then you need infrastructure to snapshot, replay, and analyze huge volumes of simulation data.[2] It’s powerful, but expensive.

In 2018, the same team that built FoundationDB set out on perhaps their most ambitious project yet: a general-purpose DST platform for everyone.

They called it Antithesis.

Antithesis brings this approach to any software stack. It provides determinism, fault injection, test composition, guidance, and property assertions out of the box—no need to rebuild your system from scratch. You package your service in Docker, define a few expectations, and Antithesis starts exploring.

Let me show you what that looks like in practice.

#Graft: case study

Working with Antithesis fundamentally changed how I approach testing. I no longer spend time scripting endless edge cases by hand. Instead, I define properties[3]: simple, declarative rules about how the system should behave. Antithesis does the rest, exploring the state space, injecting faults, and surfacing unexpected behavior I never would have thought to test.

It turned testing into a dialogue. Instead of asserting what I already knew, I was constantly learning what I didn’t. That feedback loop exposed subtle design flaws, false assumptions, and concurrency edge cases buried deep in Graft’s replication engine.

And it found real bugs—ship-stopping ones. Let’s take a look at a few of them.

Bug 1: Incorrect rollback triggered by chained faults during recovery

Antithesis exposed an intricate edge case involving sequential faults: a crash during commit followed by a network timeout during recovery. This combination led Graft to incorrectly reset internal replication pointers (pending_sync and last_sync). As a result, essential recovery state was discarded, causing the client to falsely perceive that recovery was complete.

Why it was tricky: This bug required the sequential occurrence of specific faults while other clients were able to concurrently modify the Graft Volume.

Bug 2: Segment corruption caused by abrupt request termination

Antithesis identified a subtle[4] concurrency bug related to Graft’s HTTP request handling. When client requests terminated abruptly (such as during sudden disconnects), internal locks could be released prematurely. Perfectly timed writes could exploit this window, resulting in data corruption.

Why it was tricky: This scenario required precise timing between parallel requests from different clients while simultaneously ensuring that the writes overlapped.

Bug 3: Stale data served by SQLite page cache due to identical counters

Antithesis discovered a subtle caching issue related to how SQLite invalidates its page cache. Under certain conditions—when independent Graft clients coincidentally had matching file change counters—cached pages could be mistakenly reused after synchronization. This led to perceived database corruption as SQLite could read pages in inconsistent states.

Why it was tricky: Multiple Graft clients had to synchronize with one another while having matching file change counters.

At this point, you’re probably wondering: how exactly does Antithesis manage to do this? Let’s dive into the details.

#The Antithesis hypervisor

To run arbitrary software deterministically, Antithesis had to solve a hard problem: how do you eliminate all sources of non-determinism without modifying the software itself?

Their answer was to build a hypervisor: a low-level virtual machine that emulates a fully deterministic computer. It runs your entire software stack[5] in a hermetic environment while eliminating all sources of non-determinism, including time, randomness, thread ordering, and IO.

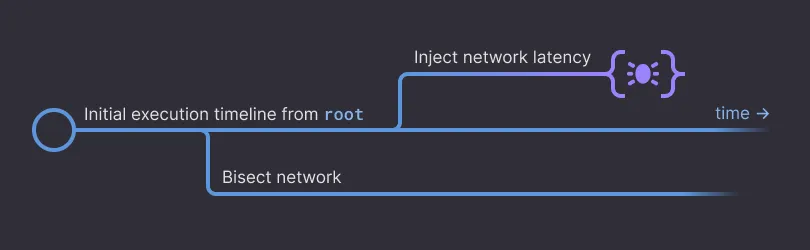

This transforms your service into a pure state machine, one where the same inputs always produce the same outputs and every timeline can be precisely replayed. But deterministic doesn’t mean simple. The state space of even a modest system is enormous[6].

To explore this space, Antithesis needs the ability to jump backward in time. That’s easy in a pure state machine: just record the initial state and the sequence of operations. From there, it can branch execution at key decision points, revisiting them with alternate faults, timing, and events.
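To make the replay idea concrete, here is a minimal Python sketch on a toy state machine. It is not how the Antithesis hypervisor is implemented (that works at the level of a whole virtual machine); the account model and the names `step`, `replay`, and `random_ops` are invented purely for illustration.

```python
import random

def step(balance, op):
    """Pure transition: the next state depends only on the current state and the op."""
    kind, amount = op
    if kind == "deposit":
        return balance + amount
    if kind == "withdraw" and amount <= balance:
        return balance - amount
    return balance  # a rejected withdrawal leaves the state unchanged

def replay(initial, ops):
    """Rebuild any point in a timeline from the initial state plus the recorded ops."""
    state = initial
    for op in ops:
        state = step(state, op)
    return state

def random_ops(seed, n):
    """All randomness comes from an explicit seed, so a run can be repeated exactly."""
    rng = random.Random(seed)
    return [(rng.choice(["deposit", "withdraw"]), rng.randint(1, 10)) for _ in range(n)]

# Record one timeline and compute where it ends up.
timeline = random_ops(seed=7, n=20)
final = replay(100, timeline)

# "Jump back" to step 10 by replaying a prefix, then branch into two futures.
fork_point = replay(100, timeline[:10])
branch_a = replay(fork_point, timeline[10:])             # the original future
branch_b = replay(fork_point, random_ops(seed=8, n=10))  # an alternate future
```

Because `step` is pure, `branch_a` always lands on the same state as the original run, while `branch_b` explores a different future from the same fork point.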

“Antithesis frequently returns to interesting decision points to make new choices, and explores multiple timelines in parallel. This effectively allows us to reach a fork in the road and take both paths.”

Antithesis documentation

This is what unlocks the multiverse of possible software lifetimes—and gives Antithesis its power. But determinism is only the foundation. To actually find bugs, you need a way to shake the system, generate realistic scenarios, and focus in on the paths that matter.

That’s where fault injection, test composition, and guidance come in.

#Fault injection

Antithesis injects real-world faults into your software to uncover hidden bugs—similar to chaos testing, but with a crucial advantage: determinism. Unlike traditional chaos testing, every Antithesis scenario is perfectly reproducible, accelerating debugging and enabling precise root-cause analysis.

The injected faults go far beyond basic network issues. Yes, it can simulate packet loss, latency, and network partitions. But it also targets deeper failure modes:

- System faults like node hangs, throttling, and terminations simulate overloaded or crashing machines.

- Concurrency faults such as thread pausing and CPU modulation perturb scheduling to surface race conditions.

- Time faults like clock jitter test assumptions around timeouts, daylight saving time, leap seconds, and operational errors.

This broad, repeatable fault model pushes your software far off the happy path—exactly where most bugs like to hide.
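The difference between ordinary chaos testing and deterministic fault injection can be sketched in a few lines of Python. This is a toy under stated assumptions: `FaultyNetwork`, its `drop_rate` and `max_delay` parameters, and the `run` helper are hypothetical names, and the only faults modelled are dropped and delayed messages.

```python
import random

class FaultyNetwork:
    """Toy network whose faults are drawn from a seeded RNG (illustrative only)."""

    def __init__(self, seed, drop_rate=0.2, max_delay=3):
        self.rng = random.Random(seed)
        self.drop_rate = drop_rate
        self.max_delay = max_delay
        self.log = []  # every fault decision, in order

    def send(self, msg):
        """Return the delivery delay in ticks, or None if the message was dropped."""
        if self.rng.random() < self.drop_rate:
            self.log.append((msg, "dropped"))
            return None
        delay = self.rng.randint(0, self.max_delay)
        self.log.append((msg, f"delayed {delay}"))
        return delay

def run(seed, messages=50):
    """Push a fixed workload through the network and return the fault schedule."""
    net = FaultyNetwork(seed)
    for i in range(messages):
        net.send(f"msg-{i}")
    return net.log

# The same seed reproduces the exact same sequence of faults.
first = run(seed=42)
second = run(seed=42)
```

Since every fault decision comes from the seed, a failing run can be handed to a colleague as a single number and replayed exactly, which is the property that makes root-cause analysis tractable.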

While faults simulate failures in the environment, they are only half of the equation. They need to be combined with domain-specific scenarios which drive your software through meaningful execution paths. For that, we need to discuss the Antithesis test composer.

#Test composition

Think of the Antithesis test composer as an automated integration test builder. You provide the test composer with a set of scripts, called test commands. Each test command does something to the system under test. For example, a test command might simulate a user running a specific workload. Or it may trigger a rare event like failing over to a database replica.

The test composer uses this collection of test commands to generate random scenarios. In effect, rather than you writing rigid end-to-end integration tests, the test composer generates nearly infinite variations of timelines by mixing and matching test commands.
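As a rough illustration of mixing and matching test commands, here is a small Python sketch. It does not use the real test composer interface: the commands `write`, `delete`, and `failover`, the weights, and the toy key-value store are all assumptions made for the example.

```python
import random

# Hypothetical test commands for a toy key-value store.
def write(store, rng):
    store[f"k{rng.randrange(5)}"] = rng.randrange(100)

def delete(store, rng):
    store.pop(f"k{rng.randrange(5)}", None)

def failover(store, rng):
    # Rare event: a replica takes over, modelled as swapping in a copy of the data.
    replica = dict(store)
    store.clear()
    store.update(replica)

COMMANDS = [write, delete, failover]
WEIGHTS = [8, 3, 1]  # make failover rare but not impossible

def run_scenario(seed, length=30):
    """Compose a random scenario from the commands and run it on a fresh store."""
    rng = random.Random(seed)
    store = {}
    names = []
    for _ in range(length):
        command = rng.choices(COMMANDS, weights=WEIGHTS)[0]
        command(store, rng)
        names.append(command.__name__)
        # A property checked after every command, whatever the ordering.
        assert len(store) <= 5
    return names, store

names, store = run_scenario(seed=1)
```

Each seed yields a different ordering of the same three commands, so adding one new command multiplies the number of scenarios that can be generated.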

This combination of randomly generated timelines and fault injection forms the foundation of the multiverse. But we are still just exploring at random: the computational equivalent of tossing ideas into the wind.

We need a way to tell Antithesis when it’s on the right path.

#Guidance

The true magic of Antithesis comes in the form of “guidance”. If you think about the multiverse of timelines your software can experience, guidance is like a series of lightposts that illuminate paths to interesting portions of the state space.

Guidance comes in the form of “asserts”[7]. Unlike the asserts you may be familiar with, these don’t crash your software. Instead, they inform Antithesis that a particularly interesting or erroneous event has occurred, and that it may be valuable to spend more time exploring branching timelines starting at these lightposts.

There are five kinds of asserts currently available:

- AssertSometimes: assert that a given condition is true in at least one timeline. This property is so powerful there is an entire doc about it.

- AssertAlways: assert that a given condition is true every time the assertion is encountered and that it is encountered at least once.

- AssertAlwaysOrUnreachable: assert that a given condition is true every time the assertion is encountered or that the assertion is never encountered.

- AssertReachable: this line of code must be encountered in at least one timeline.

- AssertUnreachable: this line of code is never encountered in any timeline.
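The semantics of the five kinds are easiest to see when they are judged across many timelines at once. The following Python sketch models that bookkeeping. It is not the Antithesis SDK: the `Properties` class, its method names, and the `declare` step (a stand-in for however the platform learns about assertions that never ran) are assumptions for illustration.

```python
from collections import defaultdict

class Properties:
    """Toy model of the five assertion kinds, judged across all timelines."""

    def __init__(self):
        self.kinds = {}                # property name -> kind
        self.held = defaultdict(int)   # times the condition was true
        self.broke = defaultdict(int)  # times the condition was false

    def declare(self, kind, name):
        # Hypothetical: register up front so "never encountered" is visible.
        self.kinds[name] = kind

    def _record(self, kind, name, condition):
        self.kinds[name] = kind
        if condition:
            self.held[name] += 1
        else:
            self.broke[name] += 1

    def always(self, name, condition):
        self._record("always", name, condition)

    def always_or_unreachable(self, name, condition):
        self._record("always_or_unreachable", name, condition)

    def sometimes(self, name, condition):
        self._record("sometimes", name, condition)

    def reachable(self, name):
        self._record("reachable", name, True)

    def unreachable(self, name):
        self._record("unreachable", name, False)

    def passed(self, name):
        kind, held, broke = self.kinds[name], self.held[name], self.broke[name]
        if kind == "always":
            return broke == 0 and held > 0
        if kind in ("always_or_unreachable", "unreachable"):
            return broke == 0
        return held > 0  # "sometimes" and "reachable"

props = Properties()
props.declare("unreachable", "corrupt page observed")
props.declare("always", "never exercised")

for timeline in range(3):
    balance = 10 - timeline
    props.always("balance is non-negative", balance >= 0)
    props.sometimes("commit was retried", timeline == 2)
```

In this run, "commit was retried" passes even though it held in only one of three timelines, while "never exercised" fails: an always-style property that is never encountered tells you nothing, which is exactly the gap the AlwaysOrUnreachable variant exists to permit.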

After liberally adding these asserts throughout your codebase and test commands, Antithesis uses them to explore more interesting parts of your software’s state space.

As an example, let’s say you’re building a distributed transactional storage engine. You add a precept::maybe_fault in the middle of your commit process, which internally calls AssertReachable when the fault triggers. Antithesis notices the AssertReachable and starts executing many parallel test scenarios starting immediately after the fault. In one of those scenarios, Antithesis causes a network partition, separating the faulting client from the server in the middle of recovery. Moments later you’re fixing a ship-stopping bug and breathing a huge sigh of relief.
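A toy version of that story fits in a few lines of Python. The sketch below assumes a system whose behavior is fully determined by a list of choices, and a search that branches exhaustively from any prefix where the reachability marker fired. The functions `run` and `explore` and the magic values 7 and 3 are invented for the example, and the real exploration strategy is certainly more sophisticated than this.

```python
import random

def run(choices):
    """Deterministic toy system: the events depend only on the sequence of choices."""
    events = []
    if len(choices) >= 1 and choices[0] == 7:
        events.append("fault_during_commit")  # where a reachability assert would fire
        if len(choices) >= 2 and choices[1] == 3:
            events.append("bug")  # e.g. a partition in the middle of recovery
    return events

def explore(seed, budget=1000):
    """Random search that branches exhaustively from any prefix that hit the marker."""
    rng = random.Random(seed)
    for _ in range(budget):
        prefix = [rng.randrange(10)]
        if "fault_during_commit" in run(prefix):
            # Guidance: this prefix is interesting, so try every next choice from it.
            for next_choice in range(10):
                candidate = prefix + [next_choice]
                if "bug" in run(candidate):
                    return candidate  # a replayable recipe for the bug
    return None

path = explore(seed=0)
```

Unguided random search would need both choices to line up in the same timeline, roughly a 1 in 100 chance per attempt here. With the marker as a lightpost, the search only has to stumble on the first choice and can then enumerate what follows.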

#DST + guidance = safer software

By combining deterministic simulation, fault injection, test composition, guidance, and the multiverse, Antithesis makes it possible to fuzz test complex systems with precision and depth—without the massive overhead of building your own DST from scratch.

But Antithesis isn’t just a bug finder—it reshapes how we think about testing. Instead of hand-crafting brittle integration tests, developers define high-level properties and let Antithesis explore the system. A passing property builds confidence. A failing one exposes gaps—missing coverage, incorrect assumptions, blind spots in your mental model.

Best of all, this process compounds. Each new property makes exploration smarter. Each new test command expands the accessible subset of the multiverse. Over time, your test suite evolves into something greater than the sum of its parts: a dynamic system that continuously pushes itself toward correctness.

It’s a paradigm shift—property-based fuzz testing scaled to entire systems. That power used to require bespoke infrastructure—now it’s a product.

If you’re building critical software and want to test like the best teams in the industry, visit antithesis.com to learn more.

#Learn more about Graft

Check out the last blog post called “Stop syncing everything” for a deep dive on Graft. Or go straight to GitHub—we are looking for contributors and users alike!

If you’d like to chat about Graft, open a discussion, join the Discord or send me an email. I’d love your feedback on Graft’s approach to lazy, partial edge replication.

I’m also planning on launching a Graft Managed Service. If you’d like to join the waitlist, you can sign up here.

#Footnotes

1. Other approaches existed (property-based testing, fuzzers, network simulators, formal models), but none could simulate a full distributed system deterministically.

2. FoundationDB estimates that they have run more than one trillion CPU-hours of simulation testing. Imagine reading those logs.

3. Antithesis acts like a scaled-up property-based testing system.

4. This bug appeared in 64 out of 149,784 timelines. That’s a 0.04% failure rate!!

5. You pack your software up in Docker containers and then wrap it in a Docker Compose file. Easy peasy!

6. Now imagine the state machine for a vibe-coded Electron app.

7. Antithesis calls them asserts, but I find that term confusing because it’s so heavily overloaded. I wrote an entire library called precept in order to use the term “expect” instead. Oh, and it has some other fun features like custom fault injection.